Care-Providing Robot FRIEND

The care-providing robotic system FRIEND (Functional Robot arm with user-frIENdly interface for Disabled people) is a semi-autonomous robot designed to support disabled and elderly people in their daily life activities, such as preparing and serving a meal, and in their reintegration into professional life. FRIEND makes it possible for such people, e.g. patients who are paraplegic or have muscle diseases or serious paralysis (for instance due to strokes), to perform special tasks in daily life in a self-determined way and without help from other people such as therapists or nursing staff.

The robot FRIEND is the third generation of such robots developed at the Institute of Automation (IAT) of the University of Bremen within different research projects.[1][2][3][4] Within the last project, AMaRob, an interdisciplinary consortium consisting of technicians, designers, therapists and further representatives of various interest groups influenced the development of FRIEND. Besides the various technical aspects, design aspects and requirements from daily practice given by therapists were included in order to develop a care-providing robot that is suitable for daily life activities. The AMaRob project was funded by the German Federal Ministry of Education and Research ("BMBF - Bundesministerium für Bildung und Forschung") within the "Leitinnovation Servicerobotik".

Systems

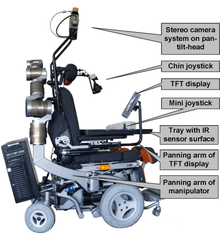

FRIEND is built from reliable industrial components. It is based on the wheelchair platform Nemo Vertical, an electric wheelchair from Meyra. This basic platform has been equipped with various additional components, which are described below.

- Robot Arm / Manipulator: The Light Weight Robotic Arm 3 (LWA3) is a manipulator from Schunk with 7 degrees of freedom, mounted on an automated panning arm so that it can be parked behind the seat while FRIEND navigates through narrow passages. The robot arm is equipped with the prosthetic hand "SensorHand Speed" from Otto Bock, which has built-in slip sensors that detect the slipping of a gripped object and adapt the grip force accordingly. A force-torque sensor is mounted at the robot's wrist to perform force-torque-based reactive manipulative operations and to detect collisions.

- TFT-Display: The TFT display provides visual information to the user and is also mounted on a panning arm.

- Intelligent Tray: In front of the user an intelligent tray is available on which objects can be placed by the manipulator. The tray uses infra-red (IR) devices to acquire precise information about the locations of the objects to be manipulated.

- Stereo Camera System: A Bumblebee 2 stereo camera system with built-in calibration, synchronization and stereo projective calculation features is used to acquire information about the environment. It is mounted at the top of the system on a pan-tilt head unit, which itself is installed on a special rack behind the seat.

- Computer System: A high-end PC unit is mounted on the wheelchair platform behind the user. The mounting as depicted is still in a prototype state.

- Input devices: Several input devices are available for FRIEND or under development: chin joystick, hand joystick, speech control (input and output), brain-computer interface (BCI) and eye control. The input devices are adapted to the impairments or preferences of the user.

- Infra-red Communication and Appliances: An infra-red control unit, developed by IGEL, for communication with various appliances in the robot's environment is integrated underneath the pan-tilt head unit. It allows, for example, the automatic door opening mechanisms of the refrigerator and the microwave, the configuration and control of the microwave itself, and various consumer electronics components to be operated wirelessly.

Usage

Within the AMaRob project, three scenarios were developed that support disabled and elderly people in their Activities of Daily Life (ADL) as well as in professional life.

ADL

This scenario enables the user to prepare and eat a meal. A special meal-tray has been designed which can be gripped by the manipulator. First the meal-tray is fetched from a refrigerator which is equipped with an automatic door opener. Then the manipulator puts the meal-tray into a remote-controlled microwave oven to cook the meal. After cooking, the meal-tray is fetched from the microwave oven and placed on the tray of the wheelchair. FRIEND then supports the user in eating the meal: with a specially designed spoon the manipulator can take a portion of the meal and feed the user. After the eating procedure the meal-tray is put away. The handles are designed in such a way that they can be robustly recognized by the vision system and easily grasped by the manipulator's gripper.

Library

The first professional scenario is situated at a library service desk. Professional scenarios are very important for disabled people from the viewpoint of re-integration into daily life activities. With FRIEND the user can manage tasks like lending and return of books.

Workshop

The second professional scenario takes place in a rehabilitation workshop. The realized scenario is representative of many quality control tasks in industry and consists of checking the functionality of keypads for public telephone boxes. A keypad has to be taken from a keypad magazine by the gripper and put into a test adapter to check that the keypad's electronics work correctly. After that each keypad has to be inspected visually by the user to detect cracks or similar damage. Based on the results, the keypads have to be sorted.

Architecture

The realization of semi-autonomy is based on the central idea to include human tasks into task execution. A good explanation of this principle is given in the following quotation:

- "When we think of interfaces between human beings and computers, we usually assume that the human being is the one requesting that a task be completed, and the computer is completing the task and providing the results. What if this process were reversed and a computer program could ask a human being to perform a task and return the results? [Mechanical Turk - amazon.com]"

With respect to a semi-autonomous service robotic system this means that the user's cognitive capabilities are taken into account whenever a robust and reliable technical solution is unavailable. The general acceptance of such a system can be expected to be low. But for people who depend on a personal assistant, like the disabled or elderly, this approach offers the opportunity to decrease this dependency and therefore to increase their quality of life. A service robot has to be able to pursue a certain mission goal as commanded by its user but also needs to react flexibly to dynamic changes within the workspace. To meet these requirements, hybrid multi-layer control architectures have been successfully applied.[5][6] These architectures usually consist of three layers:

- A deliberative layer, which contains a task planner to generate a sequence of operations to reach a certain goal with respect to the user's input command.

- A reactive layer, which has access to the system's sensors and actuators and provides reactive behavior which is robust even under environmental disturbances, for example with the help of closed-loop control.

- A sequencer that mediates between deliberator and reactive layer i.e. activates or deactivates reactive operations according to the deliberator's specification.
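The division of labour between the three layers listed above can be illustrated with a minimal Python sketch. It is not taken from MASSiVE or from the cited architectures; the class names, the plan table and the stand-in skills are all hypothetical and only show how a sequencer might mediate between a planner and reactive operations.

```python
from typing import Callable, Dict, List


class Deliberator:
    """Deliberative layer: turns a user command into a sequence of operations."""

    def __init__(self, plans: Dict[str, List[str]]) -> None:
        self.plans = plans  # hypothetical pre-specified task knowledge

    def plan(self, command: str) -> List[str]:
        return list(self.plans[command])


class ReactiveLayer:
    """Reactive layer: holds the skills that access sensors and actuators."""

    def __init__(self) -> None:
        self.skills: Dict[str, Callable[[], bool]] = {}
        self.executed: List[str] = []

    def register(self, name: str, skill: Callable[[], bool]) -> None:
        self.skills[name] = skill

    def run(self, name: str) -> bool:
        self.executed.append(name)
        return self.skills[name]()


class Sequencer:
    """Sequencer: activates reactive operations in the order the plan specifies."""

    def __init__(self, deliberator: Deliberator, reactive: ReactiveLayer) -> None:
        self.deliberator = deliberator
        self.reactive = reactive

    def execute(self, command: str) -> bool:
        for operation in self.deliberator.plan(command):
            if not self.reactive.run(operation):
                return False  # stop as soon as one operation fails
        return True


deliberator = Deliberator({"fetch cup": ["locate cup", "grasp cup", "place cup"]})
reactive = ReactiveLayer()
for skill_name in ["locate cup", "grasp cup", "place cup"]:
    reactive.register(skill_name, lambda: True)  # stand-ins for real skills

succeeded = Sequencer(deliberator, reactive).execute("fetch cup")
print(succeeded, reactive.executed)
```

In this sketch the deliberator never touches a skill directly and the reactive layer knows nothing about goals, which mirrors the separation the three-layer description implies.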

The software framework MASSiVE (Multi-layer Architecture for Semi-autonomous Service robots with Verified task Execution)[7][8] is a special kind of hybrid multi-layer control architecture which is tailored to the requirements of semi-autonomous and distributed systems, like the care-providing robot FRIEND, acting in environments with distributed smart components. These intelligent wheelchair-mounted manipulator systems benefit from the inclusion of the user's cognitive capabilities in task execution, which lowers the system complexity compared to a fully autonomous system. The semi-autonomous control requires a sophisticated integration of a human-machine interface (HMI) which is able to couple input devices according to the user's impairment,[9] for example a haptic suit, eye-mouse, speech recognition, chin joystick or a brain-computer interface (BCI).[10][11] The resulting MASSiVE control architecture with special emphasis on the HMI component is depicted in Fig. 2. Here, the deliberator has been moved to the sequencer component, and the HMI has direct access to control the actuators in the reactive layer during user interactions (e.g., to move the camera until the desired object to be manipulated is in the field of view).

Besides the focus on semi-autonomous system control, the MASSiVE framework includes a second main paradigm, namely the pre-structuring of task knowledge. This task planner input is specified offline in a scenario- and model-driven approach with the help of so-called process-structures on two levels of abstraction, the abstract level and the elementary level.[12] After specification and before being used for task execution, the task knowledge is verified offline to guarantee robust runtime behavior. This development process model provides structured guidance and enforces consistency throughout the whole process, so that uniform implementations and maintainability are achieved. Furthermore, it guides the development and testing of system core functionality (skills). The whole paradigm is depicted in Fig. 3.

The task is selected and started by the user via the HMI, depicted in Fig. 4, on a high level of abstraction, e.g. "cook meal". After initial monitoring to determine the current state of the system and the environment, task execution is performed and a list of elementary operations is created which can be executed autonomously by the system. These elementary operations consist of, e.g., image processing algorithms to recognize objects in the environment or manipulative algorithms to calculate a special trajectory to grasp an object.
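The semi-autonomous principle, in which the user is asked to contribute whenever an autonomous operation does not yield a result, can be sketched as follows. This is an illustrative assumption about how such a fallback might be organized, not the MASSiVE implementation; `execute_task`, the operation names and the `ask_user` callback are hypothetical.

```python
from typing import Callable, List, Optional, Tuple

# An elementary operation: a name plus a function that returns a result,
# or None when no reliable autonomous solution was found.
Operation = Tuple[str, Callable[[], Optional[str]]]


def execute_task(
    operations: List[Operation],
    ask_user: Callable[[str], str],
) -> List[Tuple[str, str, str]]:
    """Run operations in order; fall back to the user when one fails."""
    trace = []
    for name, autonomous in operations:
        result = autonomous()
        source = "system"
        if result is None:
            # Hand the step over to the user (e.g. via the HMI).
            result = ask_user(name)
            source = "user"
        trace.append((name, source, result))
    return trace


# Hypothetical elementary operations for an abstract task such as "cook meal".
operations = [
    ("recognize meal-tray", lambda: None),           # vision fails here
    ("plan grasp trajectory", lambda: "trajectory"),  # planning succeeds
]
trace = execute_task(operations, ask_user=lambda step: "user-selected position")
for entry in trace:
    print(entry)
```

The trace records which steps were solved by the system and which by the user, so the same task description covers both fully autonomous and user-assisted runs.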

Besides these layers, a world model is included in the control architecture that contains the current system's perspective on the world according to the task to be executed. Due to the hybrid architecture a separation of world-model data into two categories is mandatory: The deliberator operates with symbolic object representations (e.g. "C" for the representation of a cup), while the reactive layer deals with the sensor percepts taken from these objects, so-called sub-symbolic information. Examples for sub-symbolic information are the color, size, shape, location or weight of an object.
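The separation of world-model data into symbolic and sub-symbolic information can be pictured with a small data structure. The sketch below is an assumption made for illustration: the class and field names, the units and the example values are hypothetical and do not describe the actual FRIEND world model.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class SubSymbolicData:
    """Sensor-derived attributes of one object (used by the reactive layer)."""
    color: str
    size_mm: Tuple[float, float, float]
    location_mm: Tuple[float, float, float]


@dataclass
class WorldModel:
    # Symbolic part: symbol -> object class, e.g. "C" -> "cup".
    symbols: Dict[str, str] = field(default_factory=dict)
    # Sub-symbolic part: symbol -> latest percept of that object.
    percepts: Dict[str, SubSymbolicData] = field(default_factory=dict)

    def add_symbol(self, symbol: str, object_class: str) -> None:
        self.symbols[symbol] = object_class

    def update_percept(self, symbol: str, data: SubSymbolicData) -> None:
        if symbol not in self.symbols:
            raise KeyError(f"unknown symbol: {symbol}")
        self.percepts[symbol] = data

    def symbolic_view(self) -> Dict[str, str]:
        """What a task planner would see: symbols only, no sensor data."""
        return dict(self.symbols)

    def locate(self, symbol: str) -> Tuple[float, float, float]:
        """What a manipulation skill would need: a metric location."""
        return self.percepts[symbol].location_mm


world = WorldModel()
world.add_symbol("C", "cup")
world.update_percept(
    "C", SubSymbolicData("red", (80.0, 80.0, 100.0), (350.0, -120.0, 740.0))
)
print(world.symbolic_view(), world.locate("C"))
```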

Image processing

Service robotic systems such as the care-providing robot FRIEND have to operate in dynamic surroundings where the state of the environment is unpredictable and changes stochastically. Two main problems have therefore been encountered when developing image processing systems for service robotics: unstructured environments and variable illumination conditions. In an unstructured environment the vision system has to cope with a large amount of visual information, which requires a high degree of complexity in its implementation. The second major problem is the wide spectrum of illumination conditions that appear during the on-line operation of the machine vision system, since color is an important attribute in object recognition. The human visual system is able to compute color descriptors that stay constant even under variable illumination, which is not the case for machine vision systems. A key requirement in this field is the reliable recognition of objects in the robot's camera image, the extraction of object features from the images and, based on the extracted features, the correct localization of objects in a complex 3D environment, so that this information can be used for reliable object grasping and manipulation.

In order to cope with the problems described above, the special vision system ROVIS (RObust machine VIsion for Service robotics)[13][14] was developed for the care-providing robot FRIEND. The structure of ROVIS is depicted in Fig. 5. There are two main ROVIS components: the hardware and the object recognition and reconstruction chain. The connection between ROVIS and the overall robot control system is represented by the World Model, where ROVIS stores the processed visual information. In the initialization phase of ROVIS, the Camera Calibration module calculates the extrinsic camera parameters needed for 3D object reconstruction and the transformation between stereo camera and manipulator, which is necessary for vision-based object manipulation. The object recognition and reconstruction chain consists of robust algorithms for object recognition and 3D reconstruction for the purpose of reliable manipulation motion planning and grasping in unstructured environments and variable illumination conditions. For this purpose an accuracy of 5 mm for the estimated 3D pose is necessary, which demands very precise algorithms. In ROVIS, robustness must be understood as the capability of the system to adapt to varying operational conditions; it is realized through the inclusion of feedback mechanisms at the image processing level and also between different hardware and software components of the vision system.[15] A core part of the system is the automatic, closed-loop calculation of an image Region of Interest (ROI) on which vision methods are applied. By using a ROI the performance of object recognition and 3D reconstruction can be improved, since the scene complexity is reduced.
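The idea of feedback at the image processing level can be illustrated with a toy closed loop that adapts a segmentation threshold until a quality measure of the result reaches a reference value. This is only a sketch under stated assumptions: the quality measure (fraction of foreground pixels), the bisection update and the 4x4 test images are hypothetical and are not the algorithms used in ROVIS.

```python
from typing import List

Image = List[List[int]]  # grayscale image, pixel values 0..255


def foreground_fraction(image: Image, threshold: float) -> float:
    """Quality measure: share of pixels brighter than the threshold."""
    pixels = [p for row in image for p in row]
    return sum(p > threshold for p in pixels) / len(pixels)


def closed_loop_threshold(
    image: Image,
    target_fraction: float,
    tolerance: float = 0.02,
    max_iterations: int = 20,
) -> float:
    """Adjust the threshold by bisection until the measured foreground
    fraction matches the target within the tolerance."""
    low, high = 0.0, 255.0
    threshold = (low + high) / 2
    for _ in range(max_iterations):
        threshold = (low + high) / 2
        error = foreground_fraction(image, threshold) - target_fraction
        if abs(error) <= tolerance:
            break
        if error > 0:
            low = threshold   # too much foreground: raise the threshold
        else:
            high = threshold  # too little foreground: lower the threshold
    return threshold


def make_scene(object_value: int, background_value: int) -> Image:
    """4x4 test image with a 2x2 object in the centre (hypothetical data)."""
    return [
        [object_value if 1 <= r <= 2 and 1 <= c <= 2 else background_value
         for c in range(4)]
        for r in range(4)
    ]


dim_scene = make_scene(100, 20)      # same object under weak illumination
bright_scene = make_scene(220, 150)  # same object under strong illumination
dim_threshold = closed_loop_threshold(dim_scene, target_fraction=0.25)
bright_threshold = closed_loop_threshold(bright_scene, target_fraction=0.25)
print(dim_threshold, bright_threshold)
```

A fixed threshold would fail on one of the two scenes, whereas the loop settles on a different threshold for each and isolates the same 2x2 object in both.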

Within ROVIS several methods are used for object recognition, e.g. robust region-based color segmentation[16] and robust edge detection. The first is used for objects with uniform color and without texture (e.g. bottles, glasses, handles) and the second for objects with texture (e.g. books). In order to recognize objects in FRIEND's environment robustly, special features are extracted which are used to identify objects and to determine their pose.[17] For big objects like the refrigerator or the microwave an improved SIFT (Scale Invariant Feature Transform) algorithm is used, which was developed at the Institute of Automation at the University of Bremen.[18]

The ROVIS framework uses the configuration tool ImageNets to rapidly create new Machine Vision skills for the FRIEND robot.

Motion planning

The primary task of motion planning is to find a collision-free trajectory. In service robotics, however, other aspects such as the safety and smoothness of the trajectories must also be taken into account. To satisfy all these requirements, a sensor-based motion planning method for the manipulator was developed for FRIEND. The procedure is based on Cartesian space information[19] and is therefore both computationally efficient and highly precise. The object grasping frames are calculated on-line, which increases the flexibility of the system during execution. All implemented algorithms are suitable for real-time applications in service robotics but also for industrial usage.

The motion planning is performed based on a Mapped Virtual Reality (MVR), displayed in Fig. 6. This 3D model of the environment is built from the information within the world model which was perceived by the sensors. Before any motion of the robot arm is performed, the trajectory from the current to the goal configuration is calculated within this 3D model. During motion, on-line collision checking is done, since obstacles can move. In this case obstacles are all objects in the environment that are not involved in the current motion, which in some tasks includes the user in the wheelchair. It is important that the user is never in danger.
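On-line collision checking against a 3D model can be sketched in a strongly simplified form. The following example is an assumption for illustration only: obstacles are modelled as spheres, only points along the gripper path are tested rather than the whole arm, and the function name and safety margin are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]
Sphere = Tuple[Point, float]  # (centre, radius), all lengths in metres


def first_collision(
    waypoints: List[Point],
    obstacles: List[Sphere],
    safety_margin: float = 0.05,
) -> Optional[int]:
    """Return the index of the first waypoint that comes closer to an
    obstacle than the safety margin, or None if the path is free."""
    for index, point in enumerate(waypoints):
        for centre, radius in obstacles:
            if math.dist(point, centre) < radius + safety_margin:
                return index
    return None


trajectory = [(0.0, 0.0, 0.5), (0.1, 0.0, 0.5), (0.2, 0.0, 0.5), (0.3, 0.0, 0.5)]

# Scene at planning time: the obstacle is well away from the path.
planned_scene = [((0.3, 0.4, 0.5), 0.05)]
# Scene during motion: the obstacle has moved onto the goal position.
updated_scene = [((0.3, 0.0, 0.5), 0.02)]

print(first_collision(trajectory, planned_scene))
print(first_collision(trajectory, updated_scene))
```

Repeating the check with the latest scene during motion is what allows a path that was free at planning time to be stopped or replanned once an obstacle moves into it.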

At the wrist of the manipulator a force-torque-sensor is installed. The information from this sensor is used to detect collisions or for fine-tuning during manipulative operations, e.g. when the robot arm should put a gripped object in a small opening. This ensures robustness during execution.

In general, the trajectories calculated by motion planning algorithms are robot-like, i.e. abrupt and jerky. To make the movement of the manipulator more pleasant for the user, the trajectories are smoothed and their quality is enhanced.[20] The redundancy of the robot arm is helpful here. Since six degrees of freedom are sufficient to define a 3D pose in the environment (three for position and three for orientation) and FRIEND's manipulator has seven degrees of freedom, one degree of freedom can be chosen freely, i.e. the manipulator can turn its elbow by 360 degrees without changing the gripper's pose. This can be used to find the best trajectory from a start to a goal configuration among an infinite set of possible configurations. The seventh degree of freedom is also used to resolve and avoid deadlocks during the motion process and to keep a minimal distance to obstacles.
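The use of the redundant degree of freedom can be illustrated geometrically. With shoulder and wrist fixed, the elbow of a simplified two-link arm can lie anywhere on a circle around the shoulder-wrist axis, and the swivel angle on that circle can be chosen to maximize the distance to obstacles. The sketch below is an assumption for illustration, not the LWA3 kinematics or the published method; link lengths, obstacle positions and function names are hypothetical.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(sum(x * x for x in a)))


def elbow_position(shoulder: Vec, wrist: Vec, upper: float, fore: float,
                   phi: float) -> Vec:
    """Elbow point for swivel angle phi, with shoulder and wrist fixed."""
    axis = sub(wrist, shoulder)
    d = math.sqrt(sum(x * x for x in axis))
    n = unit(axis)
    # Distance from the shoulder to the circle centre along the axis.
    a = (upper ** 2 - fore ** 2 + d ** 2) / (2 * d)
    radius = math.sqrt(max(upper ** 2 - a ** 2, 0.0))
    # Orthonormal basis (u, v) of the plane perpendicular to the axis.
    helper = (0.0, 0.0, 1.0) if abs(n[2]) < 0.9 else (1.0, 0.0, 0.0)
    u = unit(cross(n, helper))
    v = cross(n, u)
    centre = add(shoulder, scale(n, a))
    offset = add(scale(u, radius * math.cos(phi)), scale(v, radius * math.sin(phi)))
    return add(centre, offset)


def best_elbow_angle(shoulder: Vec, wrist: Vec, upper: float, fore: float,
                     obstacles: List[Vec], samples: int = 36) -> float:
    """Sample the swivel angle and keep the one with the largest clearance."""
    def clearance(phi: float) -> float:
        elbow = elbow_position(shoulder, wrist, upper, fore, phi)
        return min(math.dist(elbow, obstacle) for obstacle in obstacles)

    angles = [2 * math.pi * k / samples for k in range(samples)]
    return max(angles, key=clearance)


shoulder, wrist = (0.0, 0.0, 0.0), (0.6, 0.0, 0.0)
obstacles = [(0.3, 0.0, -0.3)]  # hypothetical obstacle below the arm
best = best_elbow_angle(shoulder, wrist, 0.4, 0.4, obstacles)
elbow = elbow_position(shoulder, wrist, 0.4, 0.4, best)
print(best, elbow)
```

Every sampled angle leaves the wrist, and therefore the gripper pose in this simplified model, unchanged; only the elbow moves, which is why the choice can be made purely on clearance.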

References

1. Martens, C.; Prenzel, O.; Gräser, A. (2007). "The Rehabilitation Robots FRIEND-I & II: Daily Life Independency through Semi-Autonomous Task-Execution". I-Tech Education and Publishing. Vienna, Austria: 137–162. ISBN 978-3-902613-04-2. Archived from the original on 2010-06-13.

2. Ivlev, O.; Martens, C.; Gräser, A. (2005). "Rehabilitation Robots FRIEND-I and FRIEND-II with the dexterous lightweight manipulator". Restoration of Wheeled Mobility in SCI Rehabilitation. 17.

3. Volosyak, I.; Ivlev, O.; Gräser, A. (2005). "Rehabilitation robot FRIEND II - the general concept and current implementation". Proc. 9th International Conference on Rehabilitation Robotics ICORR 2005: 540–544.

4. Volosyak, I.; Kuzmicheva, O.; Ristic, D.; Gräser, A. (2005). "Improvement of visual perceptual capabilities by feedback structures for robotic system FRIEND". IEEE Trans. Syst., Man, Cybern. C. 35 (1): 66–74. doi:10.1109/TSMCC.2004.840036.

5. Schlegel, C.; Wörz, R. (1999). "The software framework smartsoft for implementing sensorimotor systems". In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems. Korea: 1610–1616.

6. Simmons, R. (2000). "Architecture, the backbone of robotic systems". In Proceedings of the 2000 IEEE International Conference on Robotics and Automation. San Francisco, CA.

7. Martens, C. (2003). "Teilautonome Aufgabenbearbeitung bei Rehabilitationsrobotern mit Manipulator - Konzeption und Realisierung eines softwaretechnischen und algorithmischen Rahmenwerkes". PhD dissertation, University of Bremen, Faculty I: Physics / Electrical Engineering. (In German). Bremen.

8. Martens, C.; Prenzel, O.; Feuser, J.; Gräser, A. (2006). "MASSiVE: Multi-Layer Architecture for Semi-Autonomous Service Robots with Verified Task Execution". In Proceedings of 10th International Conference on Optimization of Electrical and Electronic Equipments - OPTIM 2006. Brasov, Romania: Transylvania University Press, Brasov. 3: 107–112. ISBN 973-635-702-3.

9. Prenzel, O.; Martens, C.; Cyriacks, M.; Wang, C.; Gräser, A. (2007). "System-controlled user interaction within the service robotic control architecture massive". Robotica, Special Issue. Cambridge University Press. 25 (2): 237–244. ISSN 0263-5747.

10. Lüth, T.; Graimann, B.; Valbuena, D.; Cyriacks, M.; Ojdanic, D.; Prenzel, O.; Gräser, A. (2003). "A Brain-Computer Interface for Controlling a Rehabilitation Robot". In BCI Meets Robotics: Challenging Issues in Brain-Computer Interaction and Shared Control. Leuven, Belgium: 19–20.

11. Lüth, T.; Graimann, B.; Valbuena, D.; Cyriacks, M.; Ojdanic, D.; Prenzel, O.; Gräser, A. (2003). "A Low Level Control in a Semi-autonomous Rehabilitation Robotic System via a Brain-Computer Interface". In Proceedings of 10th International Conference on Rehabilitation Robotics - ICORR. Noordwijk, The Netherlands.

12. Prenzel, O. (2009). "Process Model for the Development of Semi-Autonomous Service Robots" (PDF). PhD dissertation, University of Bremen, Faculty I: Physics / Electrical Engineering. Shaker Verlag GmbH, Germany. ISBN 978-3-8322-8424-4.

13. Grigorescu, S. M. (2010). "Robust Machine Vision for Service Robotics". PhD dissertation. University of Bremen, Faculty I: Physics / Electrical Engineering, Germany.

14. Grigorescu, S. M.; Ristic-Durrant, D.; Gräser, A. (2009). "RObust machine VIsion for Service robotic system FRIEND". In Proceedings of the 2009 International Conference on Intelligent Robots and Systems (IROS). St. Louis, USA.

15. Grigorescu, S. M.; Ristic-Durrant, D.; Vuppala, S. K.; Gräser, A. (2008). "Closed-Loop Control in Image Processing for Improvement of Object Recognition". In Proceedings of the 17th IFAC World Congress. Seoul, Korea.

16. Vuppala, S. K.; Grigorescu, S. M.; Ristic-Durrant, D.; Gräser, A. (2007). "Robust Color Object Recognition for a Service Robotic Task in the System FRIEND II". In Proceedings of the 10th International Conference on Rehabilitation Robotics - ICORR Conference. Noordwijk, The Netherlands.

17. Grigorescu, S. M.; Ristic-Durrant, D. (2008). "Robust Extraction of Object Features in the System FRIEND II". In Methods and Applications in Automation. Shaker Verlag.

18. Alhwarin, F.; Wang, C.; Ristic-Durrant, D.; Gräser, A. (2008). "Improved SIFT-Features Matching for Object Recognition". BCS International Academic Conference 2008. Visions of Computer Science: 179–190.

19. Ojdanic, D. (2009). "Using Cartesian Space for Manipulator Motion Planning - Application in Service Robotics" (PDF). PhD dissertation, University of Bremen, Faculty I: Physics / Electrical Engineering. Bremen: Shaker Verlag GmbH. ISBN 3-8322-8176-2.

20. Ojdanic, D.; Gräser, A. (2009). "Improving the Trajectory Quality of a 7 DoF Manipulator". In Proceedings of the Robotic Conference. Munich, Germany.

External links

- Webpage FRIEND

- Webpage AMaRob

- Institute of Automation (IAT)

- University of Bremen

- Meyra GmbH & Co. KG

- SCHUNK GmbH & Co. KG

- Otto Bock Healthcare

- IGEL GmbH

- Stiftung Friedehorst

- Institut für integriertes Design

- "Leitinnovation Servicerobotik"

- BMBF