Foreground detection

Foreground detection is one of the major tasks in the field of computer vision. Its aim is to detect changes in image sequences. Many applications do not need to know everything about the evolution of movement in a video sequence and only require information about the changes in the scene.

Foreground detection separates these changes, which take place in the foreground, from the background. It comprises a set of techniques that typically analyze, in real time, video sequences recorded with a stationary camera.

Description

All detection techniques are based on modelling the background of the image, i.e. establishing what the background is and detecting which changes occur against it. Defining the background can be very difficult when the scene contains shapes, shadows, and moving objects. When defining the background, it is assumed that stationary objects may vary in color and intensity over time.
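The basic idea of comparing each frame against a background model can be sketched as follows. This is a minimal illustration, not a reference implementation: frames are assumed to be grayscale images stored as nested lists of integers, and the function name `foreground_mask` and the `threshold` value of 25 are hypothetical choices made for the example.

```python
def foreground_mask(frame, background, threshold=25):
    """Mark a pixel as foreground (1) when it differs from the background
    model by more than `threshold`; otherwise mark it as background (0).

    `threshold` is an illustrative value, not one taken from the literature.
    """
    return [
        [1 if abs(pixel - bg_pixel) > threshold else 0
         for pixel, bg_pixel in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]


# Toy 2x3 grayscale images (assumed data, for illustration only).
background = [[10, 10, 10],
              [10, 10, 10]]
frame = [[12, 200, 9],
         [10, 180, 11]]

mask = foreground_mask(frame, background)
```

Small intensity variations stay below the threshold and are treated as background, which is one simple way of tolerating the color and intensity drift mentioned above.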

The scenarios in which these techniques are applied tend to be very diverse. Sequences can be highly variable, with very different lighting conditions, indoor and outdoor settings, image quality, and noise levels. In addition to processing in real time, systems need to be able to adapt to these changes.

A good foreground detection system should be able to:

- Build (estimate) a background model.

- Be robust to lighting changes, repetitive movements (leaves, waves, shadows), and long-term changes.

Techniques

Foreground detection is a long-studied problem in the field of computer vision. Many techniques address it, all of them based on the distinction between a dynamic foreground and a stationary background.

Temporal average filter

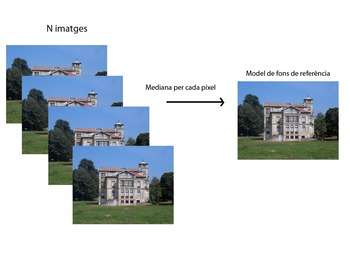

This method was proposed by Lo and Velastin. The system estimates the background model from the median, pixel by pixel, of a number of previous images. It uses a buffer holding the pixel values of the most recent frames to update the median for each new image.

To model the background, the system examines all images in a given time period called the training time. During this period the images are only observed, and the median of all the frames in the period is computed pixel by pixel to form the background.

After the training period, for each new frame, each pixel value is compared with the previously calculated background value. If the difference between the input pixel and the background value is within a threshold, the pixel is considered to match the background model and its value is added to the buffer. Otherwise, if the value falls outside this threshold, the pixel is classified as foreground and is not added to the buffer.

This method cannot be considered very efficient, because it lacks a rigorous statistical basis and requires a buffer that has a high computational cost.
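The training and classification steps described above can be sketched as follows. This is an illustrative sketch under stated assumptions rather than the authors' implementation: frames are grayscale nested lists, and the class name `TemporalMedianBackground` and the `threshold` and `buffer_size` values are hypothetical.

```python
from collections import deque
from statistics import median


class TemporalMedianBackground:
    """Per-pixel median background model (illustrative sketch)."""

    def __init__(self, training_frames, threshold=20, buffer_size=50):
        # `threshold` and `buffer_size` are assumed values for illustration.
        self.threshold = threshold
        height = len(training_frames[0])
        width = len(training_frames[0][0])
        # One bounded buffer of recent background values per pixel.
        self.buffers = [[deque(maxlen=buffer_size) for _ in range(width)]
                        for _ in range(height)]
        # Training period: store every observed value without classifying.
        for frame in training_frames:
            for y, row in enumerate(frame):
                for x, value in enumerate(row):
                    self.buffers[y][x].append(value)

    def apply(self, frame):
        """Return a mask with 1 for foreground pixels and 0 for background."""
        mask = []
        for y, row in enumerate(frame):
            mask_row = []
            for x, value in enumerate(row):
                buf = self.buffers[y][x]
                if abs(value - median(buf)) <= self.threshold:
                    buf.append(value)   # matches the model: update the buffer
                    mask_row.append(0)
                else:
                    mask_row.append(1)  # foreground: keep out of the buffer
            mask.append(mask_row)
        return mask


# Three 1x2 training frames, then one new frame (assumed toy data).
training = [[[10, 12]], [[11, 13]], [[12, 11]]]
model = TemporalMedianBackground(training, threshold=5)
mask = model.apply([[11, 90]])
```

In this toy run the first pixel matches its median and is added to the buffer, while the second is classified as foreground and left out. Keeping a separate buffer for every pixel and recomputing its median on every frame illustrates where the memory and computation cost mentioned above comes from.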

External links

- Background subtraction by R. Venkatesh Babu

- Foreground Segmentation and Tracking based on Foreground and Background Modeling Techniques by Jaume Gallego

- Detecció i extracció d’avions a seqüències de vídeo by Marc Garcia i Ramis