Memory virtualization

In computer science, memory virtualization decouples volatile random access memory (RAM) resources from individual systems in the data center, and then aggregates those resources into a virtualized memory pool available to any computer in the cluster. The memory pool is accessed by the operating system or applications running on top of the operating system. The distributed memory pool can then be utilized as a high-speed cache, a messaging layer, or a large, shared memory resource for a CPU or a GPU application.

Description

Memory virtualization allows networked, and therefore distributed, servers to share a pool of memory to overcome physical memory limitations, a common bottleneck in software performance. With this capability integrated into the network, applications can take advantage of a very large amount of memory to improve overall performance and system utilization, increase memory usage efficiency, and enable new use cases. Software on the memory pool nodes (servers) allows nodes to connect to the memory pool to contribute memory, and to store and retrieve data. Management software and memory overcommitment technologies manage shared memory, data insertion, eviction and provisioning policies, and data assignment to contributing nodes, and handle requests from client nodes. The memory pool may be accessed at the application level or operating system level. At the application level, the pool is accessed through an API or as a networked file system to create a high-speed shared memory cache. At the operating system level, a page cache can utilize the pool as a very large memory resource that is much faster than local or networked storage.
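
The management duties described above (nodes contributing memory, data assignment to contributing nodes, and eviction) can be illustrated with a small in-process simulation. This is a minimal sketch rather than any product's design: the names `PoolNode` and `MemoryPool`, the hash-modulo assignment, and the least-recently-used eviction policy are all assumptions chosen for illustration, and no networking is involved.

```python
import hashlib
from collections import OrderedDict


class PoolNode:
    """One server contributing a fixed number of bytes (hypothetical)."""

    def __init__(self, name, capacity_bytes):
        self.name = name
        self.capacity_bytes = capacity_bytes
        self.used_bytes = 0
        self.entries = OrderedDict()  # key -> bytes, least recently used first

    def put(self, key, value):
        if len(value) > self.capacity_bytes:
            raise ValueError("value larger than this node's contribution")
        if key in self.entries:
            # Replacing an existing entry: release its space first.
            self.used_bytes -= len(self.entries.pop(key))
        # Eviction policy (assumed LRU): drop old entries until the value fits.
        while self.used_bytes + len(value) > self.capacity_bytes:
            _, evicted = self.entries.popitem(last=False)
            self.used_bytes -= len(evicted)
        self.entries[key] = value
        self.used_bytes += len(value)

    def get(self, key):
        if key not in self.entries:
            return None
        self.entries.move_to_end(key)  # mark as most recently used
        return self.entries[key]


class MemoryPool:
    """Aggregates node contributions and assigns each key to one node."""

    def __init__(self):
        self.nodes = []

    def contribute(self, node):
        self.nodes.append(node)

    @property
    def capacity_bytes(self):
        return sum(node.capacity_bytes for node in self.nodes)

    def _node_for(self, key):
        # Data assignment (assumed): stable hash of the key modulo node count.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        return self.nodes[int.from_bytes(digest[:8], "big") % len(self.nodes)]

    def put(self, key, value):
        self._node_for(key).put(key, value)

    def get(self, key):
        return self._node_for(key).get(key)


pool = MemoryPool()
pool.contribute(PoolNode("server-a", 64))
pool.contribute(PoolNode("server-b", 64))
pool.put("quote:ACME", b"101.25")
print(pool.capacity_bytes)      # 128
print(pool.get("quote:ACME"))   # b'101.25'
```

Hash-modulo assignment keeps the sketch short, but it remaps most keys whenever a node joins or leaves; a real pool would likely need a placement scheme that tolerates membership changes.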

Memory virtualization implementations are distinguished from shared memory systems. Shared memory systems do not permit abstraction of memory resources, and therefore must be implemented within a single operating system instance (i.e. not within a clustered application environment).

Memory virtualization is also different from storage based on flash memory such as solid-state drives (SSDs): SSDs and similar technologies replace hard drives (networked or otherwise), while memory virtualization replaces or complements traditional RAM.

Benefits

- Improves memory utilization via the sharing of scarce resources

- Increases efficiency and decreases run time for data intensive and I/O bound applications

- Allows applications on multiple servers to share data without replication, decreasing total memory needs

- Lowers latency and provides faster access than other solutions such as SSD, SAN or NAS

Products

- RNA networks Memory Virtualization Platform - A low latency memory pool, implemented as a shared cache and a low latency messaging solution.

- ScaleMP - A platform to combine resources from multiple computers for the purpose of creating a single computing instance.

- Wombat Data Fabric - A memory-based messaging fabric for delivery of market data in financial services.

- Oracle Coherence is a Java-based in-memory data grid product by Oracle.

- AppFabric Caching Service is a distributed cache platform for in-memory caches spread across multiple systems, developed by Microsoft.

- IBM WebSphere eXtreme Scale is a Java-based distributed cache much like Oracle Coherence.

- GigaSpaces XAP is a Java-based in-memory computing software platform like Oracle Coherence and VMware GemFire.

Implementations

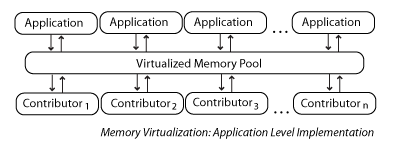

Application level integration

In this case, applications running on connected computers connect to the memory pool directly through an API or the file system.
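
One common way for an application to use such an API is the cache-aside pattern: check the pool first, and fall back to slow storage only on a miss. The sketch below assumes a hypothetical client with `get` and `put` methods; `InProcessPool` is an in-process stand-in for a networked pool, and `make_cached_loader` and `read_from_disk` are illustrative names only.

```python
class InProcessPool:
    """Stand-in for a networked memory pool client (hypothetical API)."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def put(self, key, value):
        self._data[key] = value


def make_cached_loader(pool, load_from_storage):
    """Wrap a slow loader so results are shared through the pool."""

    def load(key):
        value = pool.get(key)
        if value is None:            # miss: fall back to slow storage
            value = load_from_storage(key)
            pool.put(key, value)     # publish so other applications reuse it
        return value

    return load


storage_reads = []


def read_from_disk(key):
    storage_reads.append(key)        # record each slow-path access
    return f"contents of {key}"


# Two applications share one pool, so the second read avoids storage.
shared_pool = InProcessPool()
app_one = make_cached_loader(shared_pool, read_from_disk)
app_two = make_cached_loader(shared_pool, read_from_disk)

first = app_one("report.csv")    # miss: reads storage, fills the pool
second = app_two("report.csv")   # hit: served from the pool
print(storage_reads)             # ['report.csv']
```

Because both applications go through the same pool, the data is held once rather than replicated in each application's private cache.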

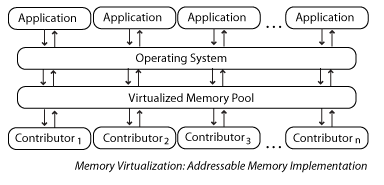

Operating system level integration

In this case, the operating system connects to the memory pool, and makes pooled memory available to applications.
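
A rough model of this arrangement is a page cache with three tiers: a small local cache, the pooled memory, and storage. The following sketch is a user-space simulation under stated assumptions, not operating system code: `TieredPageCache` is a hypothetical name, the pool is an unbounded dictionary standing in for remote memory, and pages evicted from the local tier are demoted to the pool instead of being discarded.

```python
from collections import OrderedDict


class TieredPageCache:
    """Local LRU cache backed by a (simulated) memory pool, then storage."""

    def __init__(self, local_pages, read_block):
        self.local_pages = local_pages
        self.local = OrderedDict()   # block number -> data, LRU first
        self.pool = {}               # stand-in for remote pooled memory
        self.read_block = read_block
        self.stats = {"local": 0, "pool": 0, "storage": 0}

    def read(self, block):
        if block in self.local:
            self.local.move_to_end(block)   # mark as most recently used
            self.stats["local"] += 1
            return self.local[block]
        if block in self.pool:
            data = self.pool.pop(block)     # promote back to the local tier
            self.stats["pool"] += 1
        else:
            data = self.read_block(block)   # slowest path: storage
            self.stats["storage"] += 1
        self._install(block, data)
        return data

    def _install(self, block, data):
        self.local[block] = data
        if len(self.local) > self.local_pages:
            old_block, old_data = self.local.popitem(last=False)
            self.pool[old_block] = old_data  # demote instead of discarding


cache = TieredPageCache(local_pages=2, read_block=lambda b: f"block-{b}")
for block in [1, 2, 3, 1]:
    cache.read(block)
print(cache.stats)   # {'local': 0, 'pool': 1, 'storage': 3}
```

The caller only ever invokes `read`, which mirrors the point made above: applications receive pooled memory through the operating system without addressing the pool themselves.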

Background

Memory virtualization technology follows from memory management architectures and virtual memory techniques. In both fields, the path of innovation has moved from tightly coupled relationships between logical and physical resources to more flexible, abstracted relationships where physical resources are allocated as needed.

Virtual memory systems abstract between physical RAM and virtual addresses, assigning virtual memory addresses both to physical RAM and to disk-based storage, expanding addressable memory, but at the cost of speed. NUMA and SMP architectures optimize memory allocation within multi-processor systems. While these technologies dynamically manage memory within individual computers, memory virtualization manages the aggregated memory of multiple networked computers as a single memory pool.
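
The single-computer case can be sketched as a page table lookup. This is a simplified illustration, not a model of any particular processor: the 4096-byte page size, the dictionary-based `page_table`, and the `PageFault` exception are assumptions made for the example.

```python
PAGE_SIZE = 4096  # bytes per page; a common size, assumed here


class PageFault(Exception):
    """Raised when the requested page is not resident in RAM."""


def translate(page_table, virtual_address):
    """Map a virtual address to a physical one, or signal a page fault."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    location, index = page_table[page_number]
    if location == "disk":
        # The slow path: the page must be read back from disk before use.
        raise PageFault(f"page {page_number} is in swap slot {index}")
    return index * PAGE_SIZE + offset


# Virtual page 0 lives in RAM frame 7; virtual page 1 has been moved to disk.
page_table = {0: ("ram", 7), 1: ("disk", 3)}

physical = translate(page_table, 100)
print(physical)   # 28772, i.e. 7 * 4096 + 100
```

Translating an address in page 1 raises `PageFault`, which corresponds to the speed cost mentioned above: the addressable range grows, but accesses to disk-resident pages are far slower than accesses to RAM.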

In tandem with memory management innovations, a number of virtualization techniques have arisen to make the best use of available hardware resources. Application virtualization was demonstrated in mainframe systems first. The next wave was storage virtualization, as servers connected to storage systems such as NAS or SAN in addition to, or instead of, on-board hard disk drives. Server virtualization, or full virtualization, partitions a single physical server into multiple virtual machines, consolidating multiple operating system instances onto the same machine for efficiency and flexibility. In both storage and server virtualization, the applications are unaware that the resources they are using are virtual rather than physical, so efficiency and flexibility are achieved without application changes. In the same way, memory virtualization allocates the memory of an entire networked cluster of servers among the computers in that cluster.

See also

- Virtual memory - Traditional memory virtualization on a single computer, typically using page tables to translate between virtual and physical memory addresses, with the translation lookaside buffer (TLB) caching recent translations

- In-memory database - Provides faster and more predictable performance than disk-based databases

- I/O virtualization - Creates virtual network and storage endpoints which allow network and storage data to travel over the same fabrics (Xsigo I/O Director)

- Storage virtualization - Abstracts logical storage from physical storage (NAS, SAN, File Systems (NFS, cluster FS), Volume Management, RAID)

- RAM disk - Virtual storage device within a single computer, limited to the capacity of local RAM

- InfiniBand

- 10 Gigabit Ethernet

- Distributed shared memory

- Remote direct memory access (RDMA)

- Locality of reference

- Single-system image

- Distributed cache
