Successive parabolic interpolation

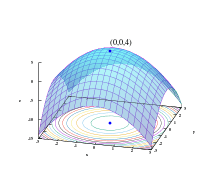

Successive parabolic interpolation is a technique for finding the extremum (minimum or maximum) of a continuous unimodal function. It works by successively fitting parabolas (polynomials of degree two) to the function. For a function of one variable, each parabola passes through three distinct points. In general, for a function of n variables, a quadratic is fitted at 1 + n(n+3)/2 points, one for each coefficient of a general quadratic in n variables. At each iteration, the "oldest" point is replaced with the extremum of the fitted parabola.
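
A minimal one-variable sketch in Python follows. It assumes three distinct starting points and uses the standard closed-form vertex of the parabola through three points. The function names, the stopping rule (the new vertex moves by less than `tol` from the newest point), and the choice to raise an error on a collinear fit are illustrative assumptions, not part of the method's definition.

```python
import math
from typing import Callable, List, Optional


def parabola_vertex(a: float, b: float, c: float,
                    fa: float, fb: float, fc: float) -> Optional[float]:
    """Abscissa of the vertex of the parabola through (a, fa), (b, fb), (c, fc).

    Returns None when the three points are collinear (degenerate parabola).
    """
    num = (b - a) ** 2 * (fb - fc) - (b - c) ** 2 * (fb - fa)
    den = (b - a) * (fb - fc) - (b - c) * (fb - fa)
    if den == 0.0:
        return None
    return b - 0.5 * num / den


def successive_parabolic_interpolation(f: Callable[[float], float],
                                       x0: float, x1: float, x2: float,
                                       tol: float = 1e-6,
                                       max_iter: int = 100) -> float:
    """Approximate an extremum of f from three distinct starting points."""
    xs: List[float] = [x0, x1, x2]  # ordered oldest first
    fs: List[float] = [f(x) for x in xs]
    for _ in range(max_iter):
        x_new = parabola_vertex(*xs, *fs)
        if x_new is None:
            raise ArithmeticError("collinear points: the fitted parabola is degenerate")
        # Stop once the new vertex barely moves away from the newest point.
        if abs(x_new - xs[-1]) < tol:
            return x_new
        # Replace the oldest point with the vertex of the fitted parabola.
        xs = xs[1:] + [x_new]
        fs = fs[1:] + [f(x_new)]
    # Iteration budget exhausted: return the newest point.
    return xs[-1]


if __name__ == "__main__":
    # f(x) = exp(x) - 2x has its minimum at x = ln 2.
    x_min = successive_parabolic_interpolation(lambda x: math.exp(x) - 2 * x, 0.0, 0.5, 1.0)
    print(x_min, math.log(2))
```

The loop never checks whether a vertex is a minimum or a maximum, so it moves toward whichever extremum the fitted parabolas point at: starting from 0, 1 and 3, both (x - 2)^2 + 1 and -(x - 2)^2 lead to x = 2.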

Advantages

Only function values are used, and when the method converges to an extremum, it does so with an order of convergence of approximately 1.325. This superlinear rate is faster than that of methods with only linear convergence, such as golden-section search. Moreover, because it does not require computing or approximating derivatives, successive parabolic interpolation is a popular alternative to methods that do require them, such as gradient descent and Newton's method.
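
The figure 1.325 can be traced to an error recurrence. A standard asymptotic argument (assumed here; this section states only the number) shows that near a well-behaved minimizer x*, the errors e_k = x_k - x* satisfy e_{k+1} ≈ C e_{k-1} e_{k-2} for some constant C. Substituting |e_k| ≈ δ^(p^k) gives p^(k+1) = p^(k-1) + p^(k-2), that is, p^3 = p + 1. The sketch below, with an illustrative function name, finds the real root of that cubic by bisection.

```python
def convergence_order(tol: float = 1e-12) -> float:
    """Real root of p**3 = p + 1, located by bisection on [1, 2]."""
    lo, hi = 1.0, 2.0  # g(p) = p**3 - p - 1 has g(1) = -1 < 0 < g(2) = 5
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mid ** 3 - mid - 1.0 < 0.0:
            lo = mid  # root lies to the right of mid
        else:
            hi = mid  # root lies at or to the left of mid
    return 0.5 * (lo + hi)


print(f"{convergence_order():.4f}")  # prints 1.3247
```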

Disadvantages

On the other hand, convergence (even to a local extremum) is not guaranteed when using this method in isolation. For example, if the three points are collinear, the resulting parabola is degenerate and thus does not provide a new candidate point. Furthermore, if function derivatives are available, Newton's method is applicable and exhibits quadratic convergence.
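
The degenerate case can be detected before computing a vertex. The parabola through (a, f(a)), (b, f(b)), (c, f(c)) has leading coefficient equal to the second divided difference f[a, b, c]. Its vertex lies at -β/(2α) for q(x) = αx^2 + βx + γ, so it does not exist when α = f[a, b, c] = 0. The sketch uses exact `Fraction` arithmetic so the zero test is exact. The helper names are hypothetical, and a floating-point implementation would more likely compare against a small tolerance (an assumption about typical practice).

```python
from fractions import Fraction


def leading_coefficient(a, b, c, fa, fb, fc):
    """Second divided difference f[a, b, c].

    This is the x**2 coefficient of the parabola through (a, fa), (b, fb), (c, fc).
    """
    return ((fc - fb) / (c - b) - (fb - fa) / (b - a)) / (c - a)


def is_degenerate(a, b, c, fa, fb, fc):
    """True when the three points are collinear, so the fit is a line with no vertex."""
    return leading_coefficient(a, b, c, fa, fb, fc) == 0


F = Fraction
print(is_degenerate(F(0), F(1), F(2), F(1), F(4), F(7)))  # points on y = 3x + 1: True
print(is_degenerate(F(0), F(1), F(3), F(4), F(1), F(1)))  # points on y = (x - 2)**2: False
```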

Improvements

Alternating the parabolic iterations with a more robust method for choosing candidates (golden-section search is a popular choice) can greatly increase the probability of convergence without hampering the convergence rate.
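
The best-known hybrid of this kind is Brent's method, which this section does not name. The sketch below follows the structure of its common textbook formulation. It keeps a bracket [a, b] together with the best point x, the second-best point w and the previous w (called v), and it proposes the vertex of the parabola through those three points. It falls back to a golden-section step whenever that vertex lies outside the bracket or the parabolic step is not shorter than half the step taken two iterations earlier. Unlike the plain method, it refits through the best points rather than strictly replacing the oldest one. The name `brent_minimize`, the default tolerance, and the small absolute term `1e-10` are illustrative choices, not a library API.

```python
import math
from typing import Callable

# Fraction of an interval used for a golden-section step: (3 - sqrt(5)) / 2 ≈ 0.382.
GOLDEN_STEP = 0.5 * (3.0 - math.sqrt(5.0))


def brent_minimize(f: Callable[[float], float], a: float, b: float,
                   tol: float = 1.5e-8, max_iter: int = 500) -> float:
    """Locate a minimum of a unimodal f on [a, b] (sketch of Brent's method)."""
    # x: best point so far; w: second best; v: previous value of w.
    x = w = v = a + GOLDEN_STEP * (b - a)
    fx = fw = fv = f(x)
    d = e = 0.0  # d: current step; e: step taken the iteration before last
    for _ in range(max_iter):
        xm = 0.5 * (a + b)
        tol1 = tol * abs(x) + 1e-10
        tol2 = 2.0 * tol1
        # Stop once the bracket [a, b] has shrunk to about 4 * tol1 around x.
        if abs(x - xm) <= tol2 - 0.5 * (b - a):
            break
        use_golden = True
        if abs(e) > tol1:
            # Parabolic step through (v, fv), (w, fw), (x, fx), written as p / q.
            r = (x - w) * (fx - fv)
            q = (x - v) * (fx - fw)
            p = (x - v) * q - (x - w) * r
            q = 2.0 * (q - r)
            if q > 0.0:
                p = -p
            q = abs(q)
            e_prev, e = e, d
            # Accept only a step that stays inside (a, b) and is shorter than
            # half the step before last; otherwise fall back to golden section.
            if abs(p) < abs(0.5 * q * e_prev) and q * (a - x) < p < q * (b - x):
                d = p / q
                u = x + d
                use_golden = False
                # Do not evaluate f too close to either end of the bracket.
                if u - a < tol2 or b - u < tol2:
                    d = math.copysign(tol1, xm - x)
        if use_golden:
            # Golden-section step into the larger of [a, x] and [x, b].
            e = (a - x) if x >= xm else (b - x)
            d = GOLDEN_STEP * e
        # Never step by less than tol1.
        u = x + d if abs(d) >= tol1 else x + math.copysign(tol1, d)
        fu = f(u)
        if fu <= fx:
            # u is the new best point: shrink the bracket toward it.
            if u >= x:
                a = x
            else:
                b = x
            v, fv, w, fw, x, fx = w, fw, x, fx, u, fu
        else:
            # x stays best: u becomes a bracket end and possibly the new w or v.
            if u < x:
                a = u
            else:
                b = u
            if fu <= fw or w == x:
                v, fv, w, fw = w, fw, u, fu
            elif fu <= fv or v == x or v == w:
                v, fv = u, fu
    return x


if __name__ == "__main__":
    # |x - 1| is unimodal but not smooth; the golden-section fallback still converges.
    print(brent_minimize(lambda t: abs(t - 1.0), 0.0, 3.0))
```

In practice, library routines implement this idea. For example, SciPy's `scipy.optimize.minimize_scalar` offers `method="brent"` and `method="bounded"`, both of which use Brent's method.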

See also

- Inverse quadratic interpolation is a related method that uses parabolas to find roots rather than extrema.

- Simpson's rule uses parabolas to approximate definite integrals.
